The hard problem in content research with LMs isn’t “answer one question from one doc.” It’s “answer one question over a lot of stuff”: a knowledge base, hundreds of posts, competitor pages. What do we already say about X? What’s the thread across all of it? Two options today: dump everything in one prompt and hit context rot (performance degrades as context grows even when it fits), or build retrieval and chunking and hope the retriever surfaces the right slices. Recursive language models offer a third path: the model treats context as something it operates on (peek, grep, partition, summarize) instead of a single blob. One logical request, no single giant forward pass, no retriever required.

How it works

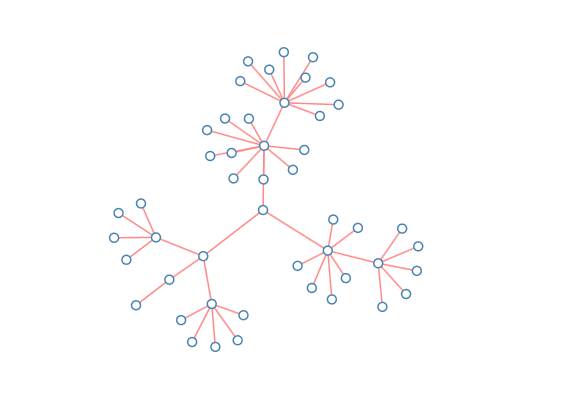

Instead of handing the model one giant context blob, you give it an environment. In the published work, that’s a REPL with the context stored in a variable. The model never sees the full context in its prompt. It knows the context exists and can operate on it: peek at the first N lines, grep for a pattern, split it into chunks and run sub-queries (e.g. with a smaller model) on each chunk, summarize subsets and reason over the summaries. From the caller’s perspective it’s still one request in, one answer out. Under the hood the model chooses how to decompose the context so no single forward pass has to attend over the whole thing.
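To make the environment idea concrete, here is a minimal sketch of the operations named above. Everything in it is an assumption for illustration: `ContextEnv`, its method names, and `fake_sub_query` are hypothetical and are not the API from the published work, which uses a REPL rather than a fixed class.

```python
import re
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class ContextEnv:
    """Hypothetical wrapper: the corpus lives here, not in the prompt."""

    text: str

    def size(self) -> int:
        """Number of lines, so the model knows how much material exists."""
        return len(self.text.splitlines())

    def peek(self, n_lines: int = 5) -> str:
        """Return only the first n_lines of the context."""
        return "\n".join(self.text.splitlines()[:n_lines])

    def grep(self, pattern: str) -> List[str]:
        """Return the lines matching a regex (case-insensitive)."""
        rx = re.compile(pattern, re.IGNORECASE)
        return [line for line in self.text.splitlines() if rx.search(line)]

    def partition(self, lines_per_chunk: int) -> List[str]:
        """Split the context into consecutive chunks of at most lines_per_chunk lines."""
        if lines_per_chunk <= 0:
            raise ValueError("lines_per_chunk must be positive")
        lines = self.text.splitlines()
        return [
            "\n".join(lines[i:i + lines_per_chunk])
            for i in range(0, len(lines), lines_per_chunk)
        ]

    def map_chunks(self, lines_per_chunk: int, sub_query: Callable[[str], str]) -> List[str]:
        """Run a sub-query (e.g. a smaller model) on each chunk separately."""
        return [sub_query(chunk) for chunk in self.partition(lines_per_chunk)]


# Stand-in for a call to a smaller model: it just reports the chunk's line count.
def fake_sub_query(chunk: str) -> str:
    return f"{len(chunk.splitlines())} lines"


env = ContextEnv("pricing: free tier\nblog: launch post\npricing: pro tier\nabout: team page")
first_two = env.peek(2)                       # a small sample, not the whole corpus
pricing_lines = env.grep(r"^pricing")         # only the lines that match
chunk_notes = env.map_chunks(3, fake_sub_query)  # one sub-query per chunk
```

In a real setup `fake_sub_query` would be replaced by an actual model call, and the model itself would decide which of these operations to invoke and in what order.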

So the shift is: context isn’t “everything in the prompt.” It’s an object the model interacts with. The model picks the interaction (peek, filter, partition, summarize) instead of you designing a retrieval pipeline or a fixed chunking scheme.

Context rot isn’t mainly a capacity limit; it’s a quality limit. Longer sequences are often out of distribution, and attention over huge context degrades. So the fix isn’t bigger windows. It’s never putting the whole context in one call. Let the model pull in only what it needs, when it needs it. On hard long-context benchmarks, recursive setups built on a smaller model have been shown to beat a single call to a larger one, and to hold quality at 10M+ tokens where baselines and retrieval-based agents drop off. The gain comes from decomposition, not from raw context size.

Task decomposition vs context decomposition

Most agent systems are built around task decomposition: we define the steps (search, read, summarize). RLMs are built around context decomposition: the model decides how to slice and query the material. That’s a different lever. For research over a corpus, the right slice often depends on the question and the data: API docs vs. blog archives vs. competitor pages. Letting the model choose the decomposition (peek, grep, partition, summarize) makes “one question over everything” plausible without hand-built retrieval or chunking heuristics.
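One way to picture context decomposition is a loop in which the caller fixes nothing about the order of operations and a policy picks the next one based on what it has seen so far. The sketch below is illustrative only: `run`, `OPS`, and `scripted_policy` are hypothetical names, and the scripted policy stands in for the model, which in an RLM would make these choices itself.

```python
from typing import Callable, Dict, List, Tuple

# One step of history: (operation, argument, observation returned by the environment).
Step = Tuple[str, str, str]
# A policy maps (question, history so far) to the next (operation, argument).
Policy = Callable[[str, List[Step]], Tuple[str, str]]

# The operations the policy may choose from; each sees the context, the policy does not.
OPS: Dict[str, Callable[[str, str], str]] = {
    "peek": lambda ctx, arg: "\n".join(ctx.splitlines()[: int(arg)]),
    "grep": lambda ctx, arg: "\n".join(
        line for line in ctx.splitlines() if arg.lower() in line.lower()
    ),
}


def run(context: str, question: str, choose_action: Policy, max_steps: int = 5) -> str:
    """Let the policy decide how to slice the context until it emits an answer."""
    history: List[Step] = []
    for _ in range(max_steps):
        op, arg = choose_action(question, history)
        if op == "answer":
            return arg
        if op not in OPS:
            raise ValueError(f"unknown operation: {op}")
        history.append((op, arg, OPS[op](context, arg)))
    return "no answer within step budget"


# Stand-in for the model: peek once, grep for the topic, then answer with what it found.
def scripted_policy(question: str, history: List[Step]) -> Tuple[str, str]:
    if not history:
        return ("peek", "1")
    if len(history) == 1:
        return ("grep", "pricing")
    return ("answer", history[-1][2])


corpus = "title: docs index\npricing: free tier\nPricing: pro tier"
result = run(corpus, "What do we say about pricing?", scripted_policy)
```

The contrast with task decomposition is that nothing here hard-codes "search, then read, then summarize": a different question or corpus could lead the policy to a different sequence of slices.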

What you can do today

You don’t need a full RLM stack to use the same intuition. Peek: sample the start or structure of the corpus before asking the main question. Filter: grep or keyword-narrow to a subset, then prompt on that. Chunk and map: split by doc or section, run “summarize” or “extract main point” per chunk, then ask the real question over the summaries. You’re approximating “the model decomposes the context” by hand. Less flexible, but same idea, and it already makes long-context research more tractable.
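The three manual moves can be sketched in a few functions. This is a rough approximation under stated assumptions: `llm` is a hypothetical callable that takes a prompt string and returns a completion string (swap in whatever client you use), the corpus is a plain dict of document name to text, and the prompt wording is a placeholder rather than a recommendation.

```python
from typing import Callable, Dict

LLM = Callable[[str], str]  # hypothetical: prompt in, completion out


def peek(docs: Dict[str, str], n_chars: int = 200) -> Dict[str, str]:
    """Peek: sample the start of each document to learn the corpus structure."""
    return {name: body[:n_chars] for name, body in docs.items()}


def filter_docs(docs: Dict[str, str], keyword: str) -> Dict[str, str]:
    """Filter: keep only documents that mention the keyword (case-insensitive)."""
    kw = keyword.lower()
    return {name: body for name, body in docs.items() if kw in body.lower()}


def map_summaries(docs: Dict[str, str], llm: LLM) -> Dict[str, str]:
    """Chunk and map: one small call per document instead of one huge call."""
    return {
        name: llm(f"Summarize the main point of this document:\n\n{body}")
        for name, body in docs.items()
    }


def ask_over_corpus(docs: Dict[str, str], question: str, keyword: str, llm: LLM) -> str:
    """Filter, summarize each survivor, then ask the real question over the summaries."""
    subset = filter_docs(docs, keyword)
    summaries = map_summaries(subset, llm)
    joined = "\n".join(f"- {name}: {summary}" for name, summary in summaries.items())
    return llm(f"Using only these summaries:\n{joined}\n\nQuestion: {question}")
```

Here the decomposition is fixed by you (keyword filter, per-document summaries), which is exactly the flexibility an RLM hands to the model. The payoff is the same, though: no single call ever sees the whole corpus.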

The takeaway

The limit on research over large corpora isn’t context window size. It’s whether the model can use the context instead of swallowing it whole. Recursive language models make that the default: one request, the model drives the decomposition, and you can scale to huge context without one huge forward pass and without building a retriever. The work is still research-grade, but the principle is the one that matters, and it’s the right direction for anyone doing content research at scale.
